Predicting the Next Best View for 3D Mesh Refinement

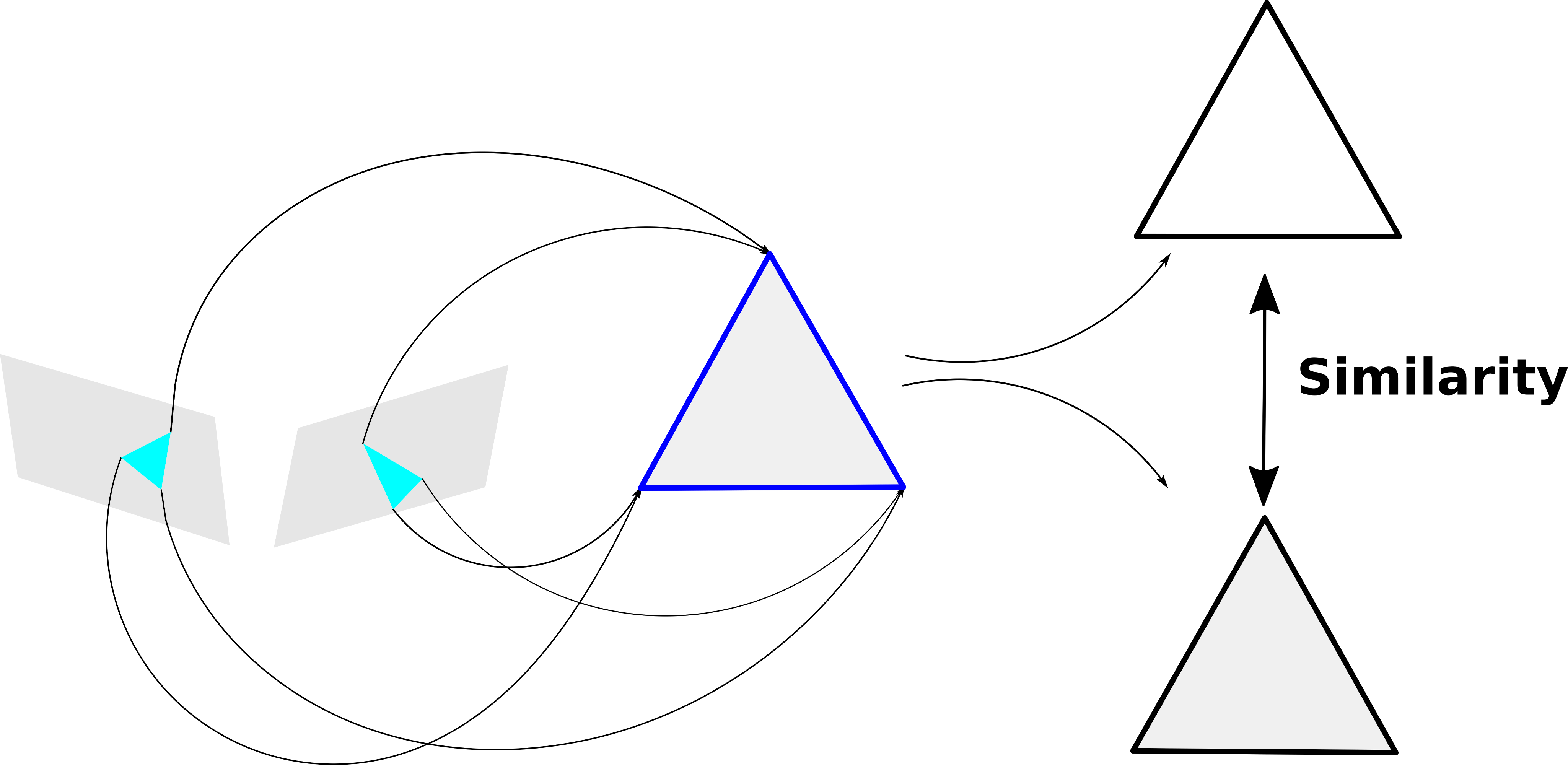

Abstract: 3D reconstruction is a core task in many applications such as robot navigation or site inspection. Finding the best poses from which to capture part of the scene is one of the most challenging topics in the field, and is known as the Next Best View problem. Many volumetric methods have recently been proposed; they choose the Next Best View by reasoning over a voxelized 3D space and finding the pose that minimizes the uncertainty encoded in the voxels. Such methods are effective, but they do not scale well, since the underlying representation requires a huge amount of memory. In this paper we propose a novel mesh-based approach that focuses the next best view on the worst reconstructed region of the environment. We define a photo-consistent index to evaluate the model accuracy, and an energy function over the worst regions of the mesh that takes into account the mutual parallax with respect to the previous cameras, the angle of incidence of the viewing ray to the surface, and the visibility of the region. We tested our approach on a well-known dataset and achieved state-of-the-art results.
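To make the three ingredients of the energy function more concrete, the following is a minimal illustrative sketch, not the formulation from the paper. It scores candidate camera positions for a single poorly reconstructed mesh facet. The function names (`view_score`, `next_best_view`), the weights, the normalization by π, the `is_visible` predicate, and the simple weighted sum are all assumptions made for illustration only.

```python
import math

def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def _unit(v):
    n = math.sqrt(_dot(v, v))
    return tuple(x / n for x in v)

def _angle(u, v):
    # Angle in radians between two vectors, clamped for numerical safety.
    c = max(-1.0, min(1.0, _dot(_unit(u), _unit(v))))
    return math.acos(c)

def view_score(candidate, previous_cameras, centroid, normal,
               visible=True, w_parallax=1.0, w_incidence=1.0):
    """Hypothetical score of one candidate camera position for one facet.

    Assumed terms (not taken from the paper):
      parallax  : mean angle between the candidate viewing ray and the
                  rays of previous cameras, divided by pi
      incidence : cosine between the facet normal and the direction
                  from the facet to the candidate
      visibility: a hard gate; occluded or back-facing views score 0
    """
    if not visible:
        return 0.0
    ray = _sub(candidate, centroid)  # facet centroid -> candidate camera
    incidence = _dot(_unit(ray), _unit(normal))
    if incidence <= 0.0:
        return 0.0  # candidate is behind the surface
    parallax = 0.0
    if previous_cameras:
        angles = [_angle(ray, _sub(p, centroid)) for p in previous_cameras]
        parallax = sum(angles) / len(angles) / math.pi
    return w_parallax * parallax + w_incidence * incidence

def next_best_view(candidates, previous_cameras, centroid, normal,
                   is_visible=lambda cam: True):
    # Pick the candidate with the highest (assumed) score.
    return max(
        candidates,
        key=lambda cam: view_score(cam, previous_cameras, centroid, normal,
                                   visible=is_visible(cam)),
    )
```

In this toy scoring, a candidate that looks straight down the normal gains nothing from parallax if a previous camera already sits on that ray, so a slightly oblique view can win. A real system would likely penalize very large parallax as well, since wide baselines make image matching harder.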

Related Links

[PDF][CODE] L. Morreale, A. Romanoni, M. Matteucci. Predicting the Next Best View for 3D Mesh Refinement @ IAS-15

Bibtex

@inproceedings{morreale2018predicting,
  title={Predicting the Next Best View for 3D Mesh Refinement},
  author={Morreale, Luca and Romanoni, Andrea and Matteucci, Matteo},
  booktitle={International Conference on Intelligent Autonomous Systems},
  pages={760--772},
  year={2018},
  organization={Springer}
}